While it may be challenging to determine the downstream effects of COVID-19 on technology, one clear result is that institutions have accelerated efforts to marshal existing technologies to support teaching and learning. This includes maximizing the interoperability of these solutions and ensuring that any newly deployed technologies improve institutions' agility in responding to new initiatives, such as increased hybrid learning.

These developments recall Educause's 2015 Next Generation Digital Learning Environment initiative, which proposed a way for institutions to develop interoperable teaching and learning ecosystems. It promoted a seamless user experience, easy navigation among integrated systems for students, customizable learning environments, and smooth data transfer between tools for instructors and program administrators.

But given pandemic challenges, are institutions heading in this direction? And if they are, which technologies are they using?

Our Approach

To understand how institutions are piecing together their digital learning ecosystems, we began by examining technology solution implementation data from our partner, LISTedTech, for 2,073 public and private four-year institutions. This data shows us how many institutions across the U.S. implement the eight types of solutions that we classify in our Higher Education Technology Landscape as part of a digital teaching and learning ecosystem. These technologies range from Learning Management Systems (LMS) to Assessment Integrity to Digital Courseware (see below).

But looking at implementation only tells part of the story. To better understand how institutions arrange their ecosystems—how many of these solutions they use and in what combinations—we also divided these institutions into groups or clusters based on statistically meaningful commonalities found among their technology implementations. The definitions of the eight types of solutions we included in our analysis are:

- Assessment Management. Solutions within this segment enable institutions to collect, manage, and report data related to student learning outcomes assessment and include products such as AEFIS (AEFIS), Taskstream (Watermark), and SmarterMeasure (SmarterServices).

- Productivity and Collaboration. Solutions in this segment enable groups of people to work together by sharing information, including conferencing applications, collaborative applications, and email applications, and include products such as Zoom (Zoom), Teams (Microsoft), and Collaborate (Blackboard).

- Learning Management Systems. An LMS delivers and manages instructional content, identifies and assesses individual and organizational learning or training goals, tracks progress toward meeting those goals, and collects and presents data for supervising learning processes. Products within this segment include Learn (Blackboard), Brightspace (D2L), and Canvas (Instructure).

- E-Portfolio. These solutions allow for the creation and management of student work compilations to evaluate coursework quality and academic achievement and create a lasting archive of educational work products. These solutions include products such as Pathbrite (Cengage Learning), Professional Portfolio (FolioTek), and Portfolium (Instructure).

- Learning Analytics. Learning analytics solutions support education objectives by analyzing data related to student engagement and academic achievement and include LoudCloud (Barnes & Noble), Performance Plus (D2L), and Engage (Blackboard).

- Assessment Integrity. Solutions within this segment help validate the management and submission process to ensure the integrity of assessments and other submitted work and include products such as Turnitin for Higher Education (Turnitin), SafeAssign (Blackboard), and ProctorTrack (ProctorTrack).

- Online Course Solutions. Online course solutions help institutions design, create, publish, and teach online courses and include products such as Coursera (Coursera), Udacity (Udacity), and Top Hat (Top Hat).

- Digital Courseware. Digital courseware solutions provide sequenced instructional content to support an entire course's delivery and include products such as MindTap (Cengage Learning), Intellus (Macmillan), and Fulcrum Labs (Fulcrum Labs).

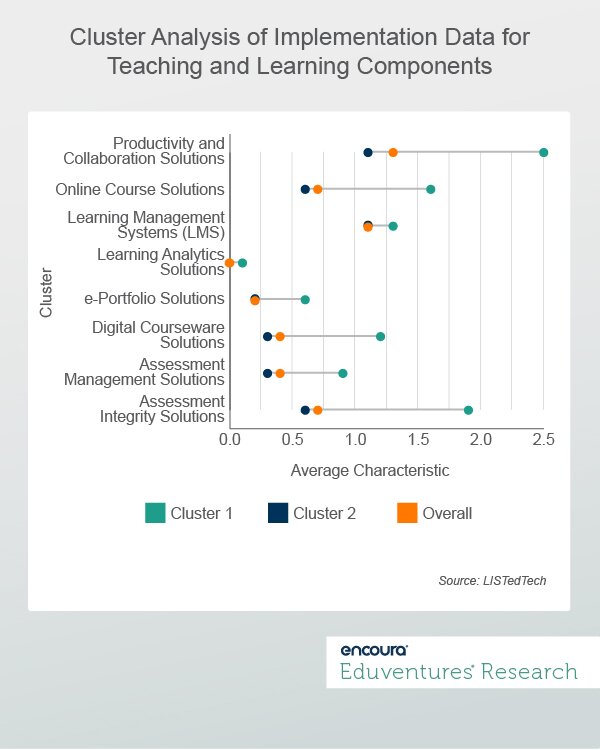

Figure 1 shows the results of our cluster analysis, and specifically, the average number of implementations of each type of solution included in each cluster of schools.

Overall, we discovered that these ecosystems fall into fewer clusters than you might predict. While one might expect a wide diversity of ecosystem implementations across such an extensive set of institutions, our analysis showed only two main types of digital learning ecosystems across most schools.

Cluster 1, which we call a "Full-Stack" ecosystem, consists of 2,035 schools, the majority of which (55%) are public institutions. As shown in Figure 1, this cluster comprises schools with a higher-than-average first-time, full-time degree/certificate undergraduate enrollment (1,976) and a smaller percentage of students enrolled in distance education (28%) than the second cluster. Full-Stack schools have higher-than-average implementations across all eight ecosystem segments, with an exceptionally high number of Assessment Integrity, Online Course, and Productivity and Collaboration Solutions implementations.

Cluster 2, or "Online-Focused," is the smaller of the two groups, with 238 schools, the majority of which (60%) are private. This cluster comprises schools with a smaller-than-average first-time, full-time degree/certificate undergraduate enrollment (322) and a higher percentage of students enrolled in distance education (35%) than Cluster 1. Online-Focused schools prioritize online learning delivery while ensuring that students adhere to academic integrity expectations (avoiding plagiarism, etc.). While these institutions deploy products in the Productivity and Collaboration, Digital Courseware, and Assessment Integrity segments more than in any other segment, their average deployment of each of these solutions is still lower than that of Cluster 1 institutions.

Our analysis also shows that Learning Analytics Solutions are struggling to gain traction in the market: only 9% of Cluster 1 schools use this type of technology, and schools in Cluster 2 have not implemented these solutions at all. Given the importance of understanding learning progress, especially in an increasingly digital environment, we would expect university leaders to rely on these solutions to measure, collect, analyze, and report data about learning outcomes.

The Bottom Line

Although this analysis cannot tell us how interoperable the two types of digital ecosystems are, the range of technologies they include may indicate that these schools have embraced some aspects of Educause's Next Generation Digital Learning Environment model.

It is perhaps not surprising that larger institutions, which are more often public, have implemented more technology solutions in their digital learning ecosystems. They must support more students and have the financial resources to acquire more solutions than smaller institutions. Likewise, institutions focused on delivering online learning will prioritize the technologies that support those efforts over other technologies.

What is striking about this analysis, however, is how few institutions have implemented Learning Analytics Solutions. Although the term "learning analytics" came into wide use in 2010, this segment still struggles to gain traction in higher education. We believe this is mainly due to a lack of clear understanding of what these solutions do. This may change as institutions learn more about identifying the inputs, outputs, and immediate activities that promote and ensure learning, especially around personalized and adaptive learning.

Institutional leaders should be aware of the importance of Learning Analytics in any digital learning ecosystem. While focusing on solutions that deliver and support learning is important, it is equally important to include solutions that help leaders understand learner progress across the entire ecosystem. Failing to do so risks rendering the entire ecosystem meaningless, no matter how many other technologies it contains or how interoperable those systems are.

Eduventures Principal Analyst at Encoura