Most discussions about digital content concentrate on pricing and cost. Proponents of open educational resources, for example, point to how these resources help students avoid the high cost of textbooks. Likewise, many textbook publishers have shifted to an inclusive access model where all students receive textbooks and the cost is included in tuition.

While cost is an essential aspect of decisions around the use of digital content in higher education, focusing on cost overlooks whether student use of digital content improves student success. For example, are students engaging with the content in ways that enhance their learning? This week’s Wake-Up Call will propose ways institutional leaders can address this question.

Rethinking Engagement

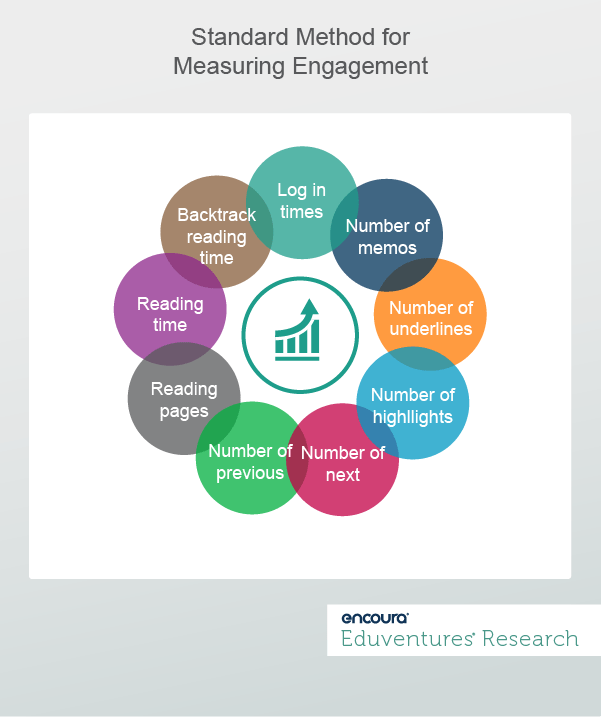

The usual way of measuring engagement with digital content resembles how we measure interactions with websites: by tracking logins, clicks, time spent on pages, or downloads. As shown in Figure 1, this method of measuring engagement concentrates on how students read, annotate, and move through the digital content. As part of its university-wide digital content adoption initiative, Indiana University deployed such an approach and found that higher engagement, measured by how often students read and highlighted digital content, correlated with higher grades.

We can intuitively understand how this type of engagement would correlate with higher grades; students need to access and read the content for a course to succeed in it. But other indicators of engagement may provide a better way to measure the link between engagement and student success, such as study habits, involvement with peers, interaction with faculty members, and participation in other educational activities (e.g., study groups). Likewise, as we know from the Courseware in Context initiative, quality instruction depends in part on the level of interaction students have with digital content, which should go beyond interaction with the content itself to encourage critical reflection and analysis.

Therefore, as it relates to engagement and digital content, we should ask the following questions:

- Are students deploying digital content in their courses to discover and explore new content and information?

- Are students leveraging digital content to develop critical thinking and reflection?

- Are students making reflective connections between prior knowledge and digital content or creating meaning across ideas, experiences, and digital material?

Determining the indicators to measure engagement with digital content in these ways may be challenging. Still, it is critical to do so to answer the question of the relationship between digital content, engagement, and student success.

If we seek to answer these kinds of deeper questions, we can view the approach taken by Indiana University—tracking logins, clicks, time spent on pages, or downloads—as a practical and essential “first wave” in our foray into understanding the impact of digital content. Another approach, indicated by research done at the Open University in the United Kingdom, explores the intersection of digital content and learning analytics through content analysis, and brings us closer to answering the questions above.

This approach, defined as “methods for examining, evaluating, indexing, filtering, recommending, and visualizing different forms of digital learning content,” goes beyond interaction data. It also includes academic data (student academic history, essay grades) and student behavioral data (discussion posts, quiz attempts, essay feedback, peer learning activities, and polls) to provide a rich picture of the connection between student achievement and student engagement with digital content. Such data, which may be captured via collaboration tools or learning management systems, can be integrated with data held in other systems (like class attendance) to help institutional leaders understand the role digital content plays in helping their students succeed.
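For technology teams, the integration described above can be pictured with a small sketch. It is only an illustration, not the method used by the Open University or any vendor: the three data sources, the field names, and the `combine_by_student` helper are all hypothetical, and a real implementation would pull these records from institutional systems rather than hard-coded lists.

```python
from collections import defaultdict

# Hypothetical per-student records, one list per source system.
# Interaction data (e.g., from a digital content platform).
interaction = [
    {"student_id": "s1", "pages_read": 120, "highlights": 14},
    {"student_id": "s2", "pages_read": 45, "highlights": 2},
]
# Behavioral data (e.g., from a learning management system).
behavioral = [
    {"student_id": "s1", "discussion_posts": 9, "quiz_attempts": 6},
    {"student_id": "s2", "discussion_posts": 1, "quiz_attempts": 3},
]
# Academic data (e.g., from a student information system).
academic = [
    {"student_id": "s1", "essay_grade": 88},
    {"student_id": "s2", "essay_grade": 71},
]


def combine_by_student(*sources):
    """Merge records from several systems into one profile per student."""
    profiles = defaultdict(dict)
    for source in sources:
        for record in source:
            # Copy every field except the key into the student's profile.
            fields = {k: v for k, v in record.items() if k != "student_id"}
            profiles[record["student_id"]].update(fields)
    return dict(profiles)


profiles = combine_by_student(interaction, behavioral, academic)
```

Each resulting profile places interaction, behavioral, and academic indicators side by side for one student, which is the kind of centralized view the approach calls for.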

The Bottom Line

Many student success indicators are clear and accepted. For example, all institutions know how to track retention rates or graduation rates. But understanding how to track success in teaching and learning is more opaque, especially since deciding on the right indicators is challenging. The likely indicators (grades, attendance, etc.) appear in disparate systems across an institution’s ecosystem, making it hard to view them in a centralized way.

Yet, understanding how to track student success in learning is critical to improving the delivery of quality digital instruction. And the essential steps are only partly technological. Instructional leaders, for example, must shift from thinking of engagement as simply student interaction with digital content, like clicking on links, toward understanding how students leverage digital content to improve critical thinking, discover new ideas, and connect prior knowledge with what they have learned from the digital content.

Likewise, vendors of online course solutions should understand that the interactions-related data they collect—while important—do not help institutions gain insight into this deeper type of engagement. Last, technology leaders should consider integrating data from online course solutions with that of other sources (like learning management systems) to paint a more accurate picture of how students engage with digital content. Without taking these steps, institutions risk missing out on the promise of understanding how student use of digital content impacts student success.
